3.3.6 Parallel processing

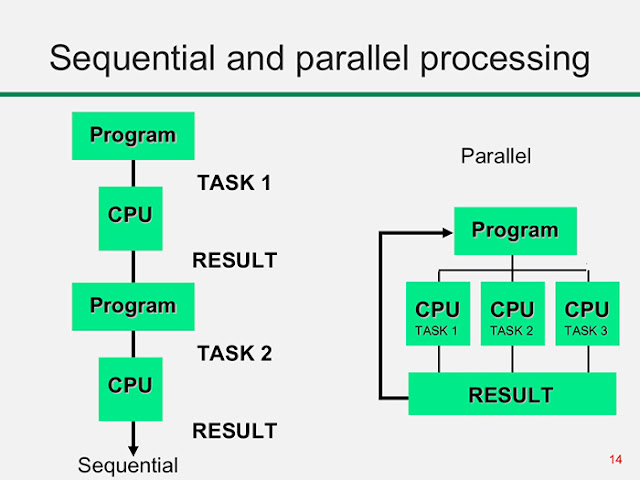

In computing, parallel processing is the execution of a program's instructions by dividing them among multiple processors, with the aim of running the program in less time. In other words, it is the simultaneous use of several processors to perform a single job.

Four basic computer architectures are:

- single instruction, single data (SISD)

- single instruction, multiple data (SIMD)

- multiple instruction, single data (MISD)

- multiple instruction, multiple data (MIMD)

Single instruction, single data (SISD)

- A computer that has no capability for parallel processing.

- There is only one processor, executing one set of instructions on a single set of data.

Single instruction, multiple data (SIMD)

- The processor has several ALUs. Each ALU executes the same instruction, but on different data.
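The SIMD idea can be modelled in a few lines of Python. This is only a conceptual sketch: the hypothetical `simd_execute` helper stands in for a control unit broadcasting one instruction, and each list element stands in for the data held by one ALU. Real SIMD hardware performs the per-ALU operations at the same time, whereas this model visits them one after another.

```python
from typing import Callable, List


def simd_execute(instruction: Callable[[int], int], lanes: List[int]) -> List[int]:
    """Apply one instruction to every lane of data (hypothetical model)."""
    # Every lane (ALU) receives the same instruction but its own data item.
    return [instruction(value) for value in lanes]


def add_ten(value: int) -> int:
    # The single instruction shared by all lanes.
    return value + 10


data = [1, 2, 3, 4]                  # one data item per ALU
result = simd_execute(add_ten, data)
print(result)                        # [11, 12, 13, 14]
```

The point to notice is that `add_ten` appears once while the data appears four times: one instruction, multiple data.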

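Multiple instruction, single data (MISD)

- There are several processors. Each processor executes different instructions, but all of them operate on the same data.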

Multiple instruction, multiple data (MIMD)

- There are several processors. Each processor executes different instructions drawn from a common pool, and each operates on different data drawn from a common pool.
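A rough sketch of the MIMD arrangement is shown here, assuming that worker threads can stand in for processors. The three task functions and the `jobs` list are illustrative names, not part of any standard. In CPython, threads may not execute truly simultaneously, so this shows the structure (different instructions, different data) rather than a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def total(values):
    # Instruction stream 1: add the values together.
    return sum(values)


def largest(values):
    # Instruction stream 2: find the maximum value.
    return max(values)


def count_even(values):
    # Instruction stream 3: count the even values.
    return sum(1 for v in values if v % 2 == 0)


# Each (task, data) pair is a different instruction stream on different data.
jobs = [
    (total, [1, 2, 3, 4]),
    (largest, [7, 3, 9]),
    (count_even, [2, 5, 8, 10]),
]

with ThreadPoolExecutor(max_workers=len(jobs)) as pool:
    futures = [pool.submit(task, data) for task, data in jobs]
    results = [f.result() for f in futures]

print(results)  # [10, 9, 3]
```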

Massively parallel computers

In a massively parallel computer, a large number of processors work together to perform a set of coordinated computations in parallel.

Features and requirements:

HARDWARE CONCERNS

The processors must be able to pass data to one another. Hence each processor needs a link to every other processor, which makes the topology challenging to design.
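Counting the links shows why the design is challenging. Assuming every one of n processors has a direct link to every other, the number of links is n(n - 1)/2, which grows roughly with the square of n. The function name `full_mesh_links` is an illustrative choice.

```python
def full_mesh_links(processors: int) -> int:
    """Number of direct links when every processor connects to every other."""
    if processors < 0:
        raise ValueError("processors must be non-negative")
    # Each of the n processors pairs with the other n - 1; every link is
    # shared by two processors, so divide by two.
    return processors * (processors - 1) // 2


for n in (4, 16, 1024):
    print(n, full_mesh_links(n))
# 4 6
# 16 120
# 1024 523776
```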

SOFTWARE CONCERNS

An appropriate programming language is needed, one that allows data to be processed by multiple processors simultaneously.

Hence the process of writing programs is complex. Any ordinary program must be divided into blocks of code such that each block can be processed independently by a processor.
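The division into independent blocks might look like the following minimal sketch. It assumes a task (summing squares) whose blocks need no data from one another, and it uses threads as stand-ins for processors. `split_into_blocks` and `sum_of_squares` are hypothetical helper names.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_blocks(items, block_count):
    """Divide items into block_count contiguous blocks of near-equal size."""
    size, extra = divmod(len(items), block_count)
    blocks, start = [], 0
    for i in range(block_count):
        # The first `extra` blocks take one additional item each.
        end = start + size + (1 if i < extra else 0)
        blocks.append(items[start:end])
        start = end
    return blocks


def sum_of_squares(block):
    # Work on one block; it needs no data from any other block.
    return sum(x * x for x in block)


numbers = list(range(1, 11))             # 1..10
blocks = split_into_blocks(numbers, 4)   # [[1, 2, 3], [4, 5, 6], [7, 8], [9, 10]]

with ThreadPoolExecutor(max_workers=4) as pool:
    partial_sums = list(pool.map(sum_of_squares, blocks))

total = sum(partial_sums)                # combine the independent results
print(total)                             # 385
```

The final `sum` is the only step that needs results from every block, which is what makes the rest of the work safe to hand out to separate processors.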
